The best part of the fediverse is that anyone can run their own server. The downside of this is that anyone can easily create hordes of fake accounts, as I will now demonstrate.

Fighting fake accounts is hard and most implementations do not currently have an effective way of filtering out fake accounts. I’m sure that the developers will step in if this becomes a bigger problem. Until then, remember that votes are just a number.

This was a problem on reddit too. Anyone could create accounts - heck, I had 8 accounts:

one main, one alt, one “professional” (linked publicly on my website), and five for my bots (whose accounts were optimistically created, but were never properly run). I had all 8 accounts signed in on my third-party app and I could easily manipulate votes on the posts I posted.

I feel like this is what happened when you’d see posts with hundreds / thousands of upvotes but had only 20-ish comments.

There needs to be a better way to solve this, but I’m unsure if we truly can solve this. Botnets are a problem across all social media (my undergrad thesis many years ago was detecting botnets on Reddit using Graph Neural Networks).

Fwiw, I have only one Lemmy account.

I see what you mean, but there’s also a large number of lurkers, who will only vote but never comment.

I don’t think it’s unfeasible to have a small number of comments on a highly upvoted post.

Maybe you’re right, but it just felt uncanny to see thousands of upvotes on a post with only a handful of comments. Maybe someone who is active on the bot-detection subreddits can pitch in.

I agree completely. 3k upvotes on the front page with 12 comments just screams vote manipulation

True, but there were also a number of subs (thinking of the various meirl spin-offs, for example) that naturally had limited engagement compared to other subs. It wasn’t uncommon to see a post with like 2K upvotes and five comments, all of them remarking how few comments there actually were.

Reddit had ways to automatically catch people trying to manipulate votes though, at least the obvious ones. A friend of mine posted a reddit link in our group for everyone to upvote and got temporarily suspended for vote manipulation like an hour later. I don’t know if something like that can be implemented in the Fediverse, but some people on GitHub suggested a way for instances to share with other instances how trusted/distrusted a user or instance is.

An automated trust rating will be critical for Lemmy, longer term. It’s the same arms race that email has to fight. There should be a linked trust system of both instances and users. The instance ‘vouches’ for its users’ trust scores. However, if other instances collectively disagree, then the trust score of the instance is also hit. Other instances can then use this information to judge how much to allow from users in that instance.
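A rough sketch of how that linked trust idea could look, just to make it concrete. Everything here is an assumption for illustration: the `Instance` class, the 0..1 score range, and the `tolerance` and `penalty` values are hypothetical and not part of Lemmy or any proposal on GitHub.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Instance:
    """A server that vouches for its own users (hypothetical model)."""
    name: str
    trust: float = 1.0  # assumed scale: 0.0 (untrusted) to 1.0 (fully trusted)
    vouched: Dict[str, float] = field(default_factory=dict)  # user -> vouched score


def effective_trust(instance: Instance, user: str) -> float:
    """How much a remote server would trust this user: the instance's own
    trust scales whatever score it vouches for. Unknown users get 0.0."""
    return instance.trust * instance.vouched.get(user, 0.0)


def apply_peer_feedback(instance: Instance, user: str, peer_scores: List[float],
                        tolerance: float = 0.2, penalty: float = 0.5) -> None:
    """If peers collectively rate the user well below what the instance
    vouched for, the instance's own trust takes the hit."""
    if not peer_scores:
        return
    consensus = sum(peer_scores) / len(peer_scores)
    gap = instance.vouched.get(user, 0.0) - consensus
    if gap > tolerance:
        instance.trust = max(0.0, instance.trust - penalty * gap)
```

With this shape, an instance that vouches 0.9 for an account everyone else rates at 0.1 drags down the weight of all its users, not only the bad one, which is the incentive the comment describes.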

This will be very difficult. With Lemmy being open source (which is good), bot makers can just avoid the pitfalls they see in the system (which is bad).

On Reddit there were literally bot armies that could cast thousands of votes instantly. It will become a problem if votes have any actual effect.

It’s fine if they’re only there as an indicator, but if the votes are what determine popularity, prioritize visibility, it will become a total shitshow at some point. And it will be rapid. So yeah, better to have a defense system in place asap.

I’m curious what value you get from a bot? Were you using it to upvote your posts, or to crawl for things that you found interesting?

The latter. I was making bots to collect data (for the previously-mentioned thesis) and to make some form of utility bots whenever I had ideas.

I once had an idea to make a community-driven tagging bot to tag images (like hashtags). This would have been useful for graph building and just general information-lookup. Sadly, the idea never came to fruition.

Cool, thank you for clarifying!

The lack of karma helps some. There’s no point in trying to rack up the most points for your account(s), which is a good thing. Why waste time on the lamest internet game when you can engage in conversation with folks on lemmy instead.

It can still be used to artificially pump up an idea. Or used to bury one.

This is the problem. All the algorithms are based on the upvote count. Bad actors will abuse this.

So maybe more weight should be put on comment count? Much harder to fake those.

That’s where all the harm comes from

Agree. Farming karma is nothing compared to making a single individual’s polarized opinion APPEAR as though it is shared by others (or by most people). We know that others’ opinions are not our own, but they do influence ours. It’s pretty important that either 1) like numbers mean nothing, in which case hot/active/etc. are meaningless, or 2) we work together to ensure trust in like numbers.

The karma though is what drove Reddit adoption to an extent. Gamification helps. It helped Reddit, it helped robinhood stocks app.

Maybe fediverse needs some gamification.

Or maybe not. Facebook and YouTube seem to be doing fine just using the like/unlike button.

You can buy 700 votes anonymously on reddit for really cheap

I don’t see that it’s a big deal, really. It’s the same as it ever was.

Over a hundred dollars for 700 upvotes O_o

I wouldn’t exactly call that cheap 🤑

On the other hand, ten or twenty quick downvotes on an early answer could swing things I guess …

The nice thing about the federated universe is that, yes, you can bulk-create user accounts on your own instance, and that server can then be defederated by other servers when it becomes obvious that it’s going to create problems.

It’s not a perfect fix and, as this post demonstrated, it is only really effective after a problem has been identified. At least for vote manipulation across servers, the software could help: if it detects that, say, 99% of new upvotes are coming from a server created yesterday with 1 post, it could at least flag that for a human to review.
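Something like the following could do that flagging. This is only a sketch under assumptions: the function name, the `max_share` and `min_age` thresholds, and the input shapes (one instance name per upvote, plus a creation date per instance) are made up for illustration and do not reflect how Lemmy stores votes.

```python
from collections import Counter
from datetime import datetime, timedelta
from typing import Dict, List


def flag_for_review(vote_instances: List[str],
                    instance_created: Dict[str, datetime],
                    now: datetime,
                    max_share: float = 0.9,
                    min_age: timedelta = timedelta(days=7)) -> List[str]:
    """Return instances that supply most of the new upvotes while being very young.

    vote_instances holds one instance name per new upvote on a post.
    An instance with no known creation date is treated as brand new.
    """
    counts = Counter(vote_instances)
    total = sum(counts.values())
    flagged = []
    for name, n in counts.items():
        share = n / total
        created = instance_created.get(name)
        age = now - created if created is not None else timedelta(0)
        if share >= max_share and age < min_age:
            flagged.append(name)  # hand this to a human, do not auto-ban
    return flagged
```

The result is a list for a moderator to look at, not an automatic action, since a small community hosted on a new server can look exactly like this for legitimate reasons.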

It actually seems like an interesting problem to solve. Instance runners have the SQL database with all the voting records, so finding manipulative instances seems a bit like a machine learning problem to me.

Web of trust is the solution. Show me vote totals that only count people I trust, 90% of people they trust, 81% of people they trust, etc. (0.9 multiplier should be configurable if possible!)
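For what it’s worth, the decaying count described above fits in a few lines. This is a sketch, not an existing Lemmy feature: `trust_graph`, `trust_weights`, `weighted_score`, and the `max_hops` cutoff are hypothetical names and choices. People I trust directly count fully, each further hop is multiplied by the configurable `decay` (0.9 by default), and everyone outside the web counts as zero.

```python
from collections import deque
from typing import Dict, Iterable


def trust_weights(trust_graph: Dict[str, Iterable[str]], me: str,
                  decay: float = 0.9, max_hops: int = 5) -> Dict[str, float]:
    """Breadth-first walk from `me`: hop 1 gets weight 1.0, hop 2 gets
    `decay`, hop 3 gets `decay ** 2`, and so on. Each person keeps the
    weight of the shortest path that reaches them."""
    weights: Dict[str, float] = {}
    queue = deque((person, 1) for person in trust_graph.get(me, ()))
    while queue:
        person, hops = queue.popleft()
        if person == me or person in weights or hops > max_hops:
            continue
        weights[person] = decay ** (hops - 1)
        for nxt in trust_graph.get(person, ()):
            queue.append((nxt, hops + 1))
    return weights


def weighted_score(votes: Dict[str, int], weights: Dict[str, float]) -> float:
    """Sum votes (+1 or -1 per voter), scaled by how much I trust each voter.
    Voters outside my web of trust contribute nothing."""
    return sum(vote * weights.get(voter, 0.0) for voter, vote in votes.items())
```

A bot swarm only moves this score if someone inside my web of trust has vouched for the bots, which shifts the problem from counting accounts to choosing whom to trust.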

Small instances are cheap, so we need a way to prevent 100 bot instances running on the same server from gaming this too

PSA: internet votes are based on a biased sample of users of that site and bots

You mean to tell me that copying the exact same system that Reddit was using and couldn’t keep bots out of is still vuln to bots? Wild

Until we find a smarter way or at least a different way to rank/filter content, we’re going to be stuck in this same boat.

Who’s to say I don’t create a community of real people who are devoted to manipulating votes? What’s the difference?

The issue at hand is the post ranking system/karma itself. But we’re prolly gonna be focusing on infosec going forward given what just happened

Reddit had/has the same problem. It’s just that federation makes it way more obvious on the threadiverse.

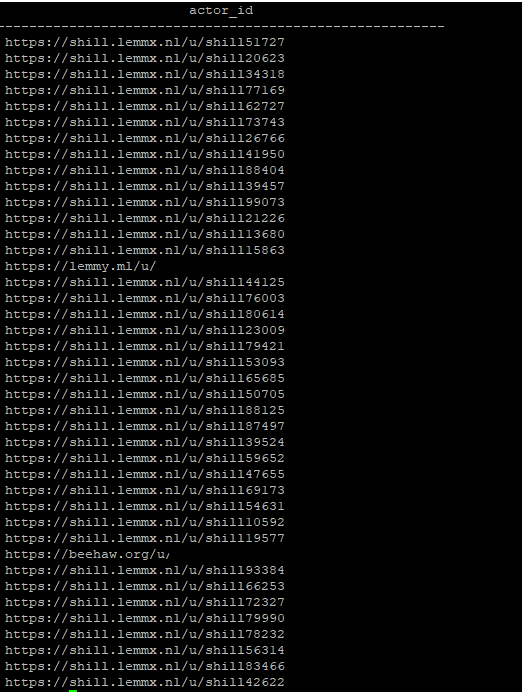

In case anyone’s wondering this is what we instance admins can see in the database. In this case it’s an obvious example, but this can be used to detect patterns of vote manipulation.

“Shill” is a rather on-the-nose choice for a name to iterate with haha

Did anyone ever claim that the Fediverse is somehow a solution for the bot/fake vote or even brigading problem?

Federated actions are never truly private, including votes. While it’s inevitable that some people will abuse the vote viewing function to harass people who downvoted them, public votes are useful to identify bot swarms manipulating discussions.

This man is over 100 years old

I’ve set the registration date on my account back 100 years just to show how easy it is to manipulate Lemmy when you run your own server.

That’s exactly what a vampire that was here 100 years ago would say.

maybe we can show a breakdown of which servers the votes are coming from so anything sus can be found out right away. Like, it would be easy enough to identify a bot farm I’d think
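A per-server breakdown is cheap to compute. A minimal sketch, assuming voters are available as `user@instance` handles (that format and the function name are assumptions for illustration, not how Lemmy exposes votes):

```python
from collections import Counter
from typing import Iterable


def votes_by_instance(voters: Iterable[str]) -> Counter:
    """Count votes per home instance, taking the part after the last '@'."""
    return Counter(handle.rsplit("@", 1)[-1] for handle in voters)
```

Calling `.most_common()` on the result puts the biggest sources first, so a post where one tiny server supplies nearly every vote stands out immediately.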